Architecture After AI

University of Texas at Austin

Symposium February 3

Exhibit January 30–February 24

In certain circles, 2022 will be remembered as the Year of the Text-to-Image Model. Last April, OpenAI launched DALL-E 2, an update to the DALL-E model released the previous year, which produces highly detailed and often surrealistic images from text descriptions in a matter of seconds. (The name is a portmanteau of the Pixar character WALL-E and the artist Salvador Dalí.) Fast on DALL-E 2’s heels came Stable Diffusion, Midjourney, Imagen, and other “deep learning” models that do essentially the same thing. The computer architectures behind these platforms are not new, but until recently they were used only by small groups of specialists. Now they let anyone with a vocabulary produce the sort of computer-generated images that were once the domain of trained visualization specialists.

With the release of these machine learning models, the worlds of art and architecture went bananas with a mix of handwringing (the robots are coming for our jobs!) and jubilation (the power at our fingertips is almost overwhelming!). Over the past few months, several conferences and exhibitions have tried to cut through the numinous fog of this first blush of excitement and to stake out theoretical guideposts for understanding these new technologies and the effects they may have on the practice of architecture. These have included the Neural Architecture Exhibit and Symposium at the University of Michigan’s Taubman College in November and several panels at the 2022 ACADIA conference. Most recently, the University of Texas at Austin School of Architecture (UTSoA) hosted an exhibition and symposium titled Architecture After AI.

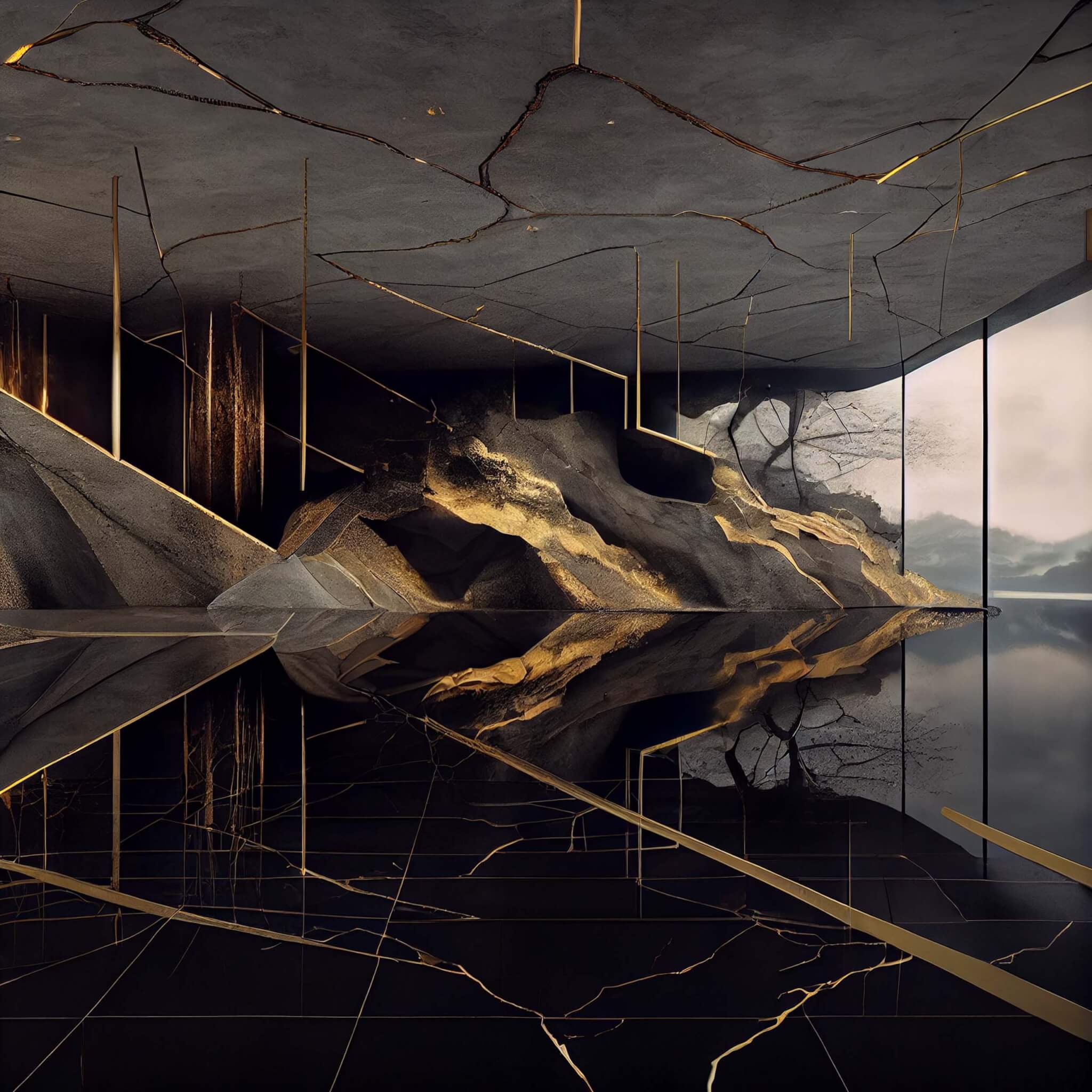

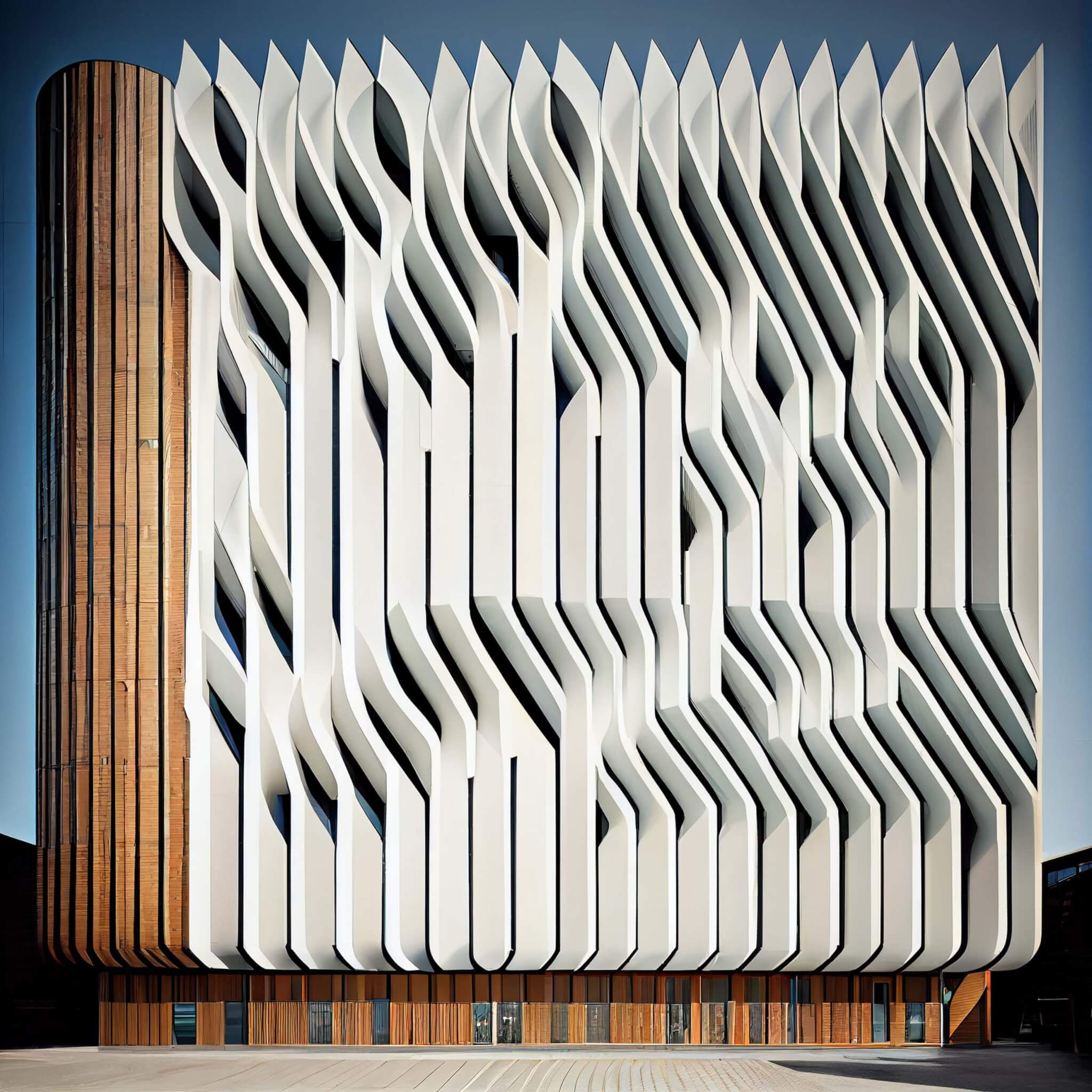

Organized by UTSoA professors Kory Bieg, Daniel Koehler, and Clay Odom, the exhibit collects approximately 2,000 AI-generated text-to-image pictures submitted by roughly 60 architecture academics and practitioners from around the world, including the curators themselves. The images are shown in an array of automatically scrolling slideshows projected onto the walls of UTSoA’s Mebane Gallery. Each image appears for a few seconds before being replaced by the next one in the queue, a dizzying stream that underscores the enormous quantity of pictures people are churning out with these models. Content-wise, most of the images lean toward utopian/dystopian architectures (it’s hard to tell the difference) festooned with, or intergrown with, plants and other natural features. Others are more tongue-in-cheek, depicting gonzo vernaculars, bizarre juxtapositions, sly literary allusions, and other inside jokes. Some seem to verge on a critique of text-to-image AI, drawing attention to unresolved details, to the impossibility of actually building these architectural visions, or to just how wide of the mark the generated image falls from the prompt. As far as I could tell, the only unifying trait was that the co-authors of these images seemed to be having a lot of fun making them. There’s clearly an element of play involved, and, recognizing that, it’s hard not to be charmed, and harder still to take any of it seriously as a prediction for the future of the built environment.

At the related symposium, however, the organizers made the case that something more serious than play is going on here. Koehler proposed that the advent of text-to-image AI, along with chatbots like ChatGPT, constitutes a watershed moment in the history of architecture, one that is fundamentally changing humanity “in the same way printed books changed us.” Bieg said architecture has reached a “singularity” at a time when it is also in crisis, and he quoted a line from Gottfried Semper’s Style in the Technical and Tectonic Arts about the arts experiencing phoenix-like rebirths through their own destruction, suggesting that AI platforms may be both the destroyer of the status quo and the progenitor of a fresh architectural future. The presenters, all of whom work with AI in some way, offered a variety of perspectives on what might lie on the other side of this historical turning point, if that’s what it is.

Andrew Kudless, founder of Matsys and professor at the University of Houston, pointed out that the current generation of AI platforms is the first digital technology to emerge in the last 30 years that focuses on expanding imagination and creativity rather than on increasing the efficiency of production. He went on to show that this expansion of imagination isn’t open-ended. Because these platforms are trained on datasets (often captioned images scraped from the web), what they produce skews toward specific associations and aesthetics contained within the data and the culture that produces it. For example, asking for image resolutions outside what a model was trained on can send it wildly off course, and if you feed it a meaningless prompt it will tend to drift toward what it knows: in the case of Midjourney, images of young, white, steampunk women, usually, and disturbingly, with cuts and bruises on their faces. Give it the same prompt but ask it not to show women, and it returns spaceships and other sci-fi vehicles. While unsettling quirks like these could be fixed over time with more training data and other adjustments, Kudless warned against a drive toward total resolution and fidelity to reality. “I think the value of generative AIs is in their ability to produce ambiguity and incoherent assemblages of form and atmosphere,” he said, comparing the use of these platforms in design to the iterative exploration of sketching.

Jenny Sabin, principal of Jenny Sabin Studio and professor at Cornell, argued that AI is fundamentally human. As such, it is quite capable of reproducing and reinforcing our more troubling dominant traits, like misogyny and racism. She said that while text-to-image AI opens new possibilities for complex form finding, other realms of AI could also benefit architecture: radically changing workflows in design and pedagogy; analyzing mistakes in construction and improvising fixes; baking sustainability into cities; and, teeing up her own work, building empathetic and healing architecture that can read and respond to its users. She then presented Ada, a project that explores how architecture, in collaboration with AI, can be empathetic and responsive to humans. Named after the polymath and early computer programmer Ada Lovelace, it was designed in collaboration with Microsoft Research. Comprising a blobby cellular framework and knitted tubes outfitted with sensors, fiber optics, and LEDs, Ada uses AI algorithms to translate “sentiment data” (smiles, frowns, etc.) into color and light that ripple throughout its translucent volume like a cephalopod or jellyfish. It’s fun to think about an architecture that smiles back at you, but also a little unsettling to contemplate the same architecture broadcasting your shitty mood to anyone nearby.

Designer, professor, writer, musician, and multimedia artist Ed Keller, who teaches at Rensselaer Polytechnic Institute and Parsons, gave a rhapsodic talk that was more about the experience (or structure of feeling) of modernity in its latest technological clothes than about AI as a tool for architecture specifically. He spoke of the weirdness of living in a time when so much of science fiction is becoming reality, a flourishing he likened to the Cambrian explosion. In his own work, that J-curve shows up in the fact that during the past few months of working with generative AI he has “created” more images than in his previous 30 years as a designer. What is the value of this eruption of content? Keller said that all of the images produced are valuable as data points to feed back into the machine, meaning that the computer itself may be their main audience. He also mused that when a handful of designers working with AI tools can, in a few months’ time, exceed the entire accumulated history of human production up to that point, that output becomes “like tears in the rain.” The elephant in the room, Keller said, is the singularity, a moment when non-human ontologies become the dominant forces in our world. Our challenge, then, as we confront AI tools that, though not yet conscious, may be building toward some form of consciousness, becomes akin to learning to talk to aliens.

Kyle Steinfeld, associate professor of architecture at UC Berkeley, brought us back down to earth with a socio-technical history of text-to-image AI. The first bit of cloud he shooed away was the notion that the subject of the conference is artificial intelligence. True AI hasn’t been invented yet, he said; the current generation of chatbots and text-to-image platforms is more accurately described as generative statistics, or linear algebra. (If that makes it clearer for anyone, please email me.) That said, Steinfeld does find some encouragement in these developments, because they may finally deliver on a promise CAD has been making since 1963: to be a genuinely collaborative creative partner in design. The current generation of CAD tools forces people into its own ways of producing, whereas text-to-image offers the possibility that machine learning design tools will start to think more like human designers. AI research also got its start in the 1960s but didn’t really take off until the 2000s, when computing power approached what was needed and the world started producing and storing oceans of data. The deep machine-learning models that enable text-to-image platforms were developed in this period to deal with the problem of processing and storing all this data. These machine-learning architectures were then put into image generators by a community of amateur artists who experimented with the tools and bragged about their successes on Reddit. As for whether these AI platforms will wreck existing creative cultures, Steinfeld suggested that computer visualization specialists may have reason to worry, because their craft has become accessible to anyone. Whatever disruption these technologies create in the job market, text-to-image AI undoubtedly makes architectural image making easy, fast, and therefore far less valuable than it was in the past.

Marta Nowak, founder of the transdisciplinary design studio AN.ONYMOUS and assistant professor at Ohio State University, followed Steinfeld with a presentation of her work with AI in architectural space. She began with a brief history of machines built to mimic animal and human behaviors, a timeline that culminated in a landmark for AI: IBM’s supercomputer Deep Blue, which in 1997 defeated Garry Kasparov at chess (it had lost to the grandmaster the previous year). She then showed work produced in a studio she co-taught with Greg Lynn at UCLA that studied how today’s robots, like the Roomba, move through space and how they perceive the objects and people around them. This research led to projects with Google R&D: flexible, hybrid office spaces that use AI to facilitate line-of-sight connection in remote/in-person meetings; workspaces that adjust to individual worker preferences with the swipe of a badge, changing desk height, pulling up family photos, and even tweaking HVAC settings; and robotic partition walls that move autonomously through open offices, unfurling to provide privacy where needed. Rather than using AI to design buildings, Nowak said, her interest is in giving “artificial intelligence to objects, architectural components, and buildings and allow[ing] them to continually interact and respond to their human users, not to create intelligently designed spaces, but to create intelligent space.”

The final presentation was by artist Sofia Crespo and her collaborator at Entangled Others Studio, Feileacan McCormack. They use AI as a tool to extract patterns and rearrange them for aesthetic effect. Their work focuses on facets of the natural world, particularly marine organisms and insects, and involves feeding data about these creatures, either scraped from the web or recorded directly during field research, into generative adversarial networks that then produce digital models. The results range from virtual petting zoos of individual specimens to fleshed-out ecosystems with multiple “lifeforms” interacting with each other. The critters are not exact replicas of extant species but bizarre golems whose forms, textures, and movements are shaped as much by the machine’s lack of understanding of what it’s trying to model as by the data it does receive. “The lack of data becomes part of the artistic statement,” Crespo said. “AI can’t imagine what these creatures look like unless it gets a sample… The project is shaped by the lack of something.” While fascinating to look at, the work still takes a back seat to what anybody who cares to look closely can find outside. “You can scarcely ever generate an insect that doesn’t get completely knocked off stage by the sheer diversity and creativity in natural selection out there,” McCormack said. “What we can do is use this as a way of mapping the data we have available to us about the insectile world, in this case very little, but still within just that tiny fragment we can find so much fantastic and curious forms to explore.”

If this is the dawn of a new age of AI-enabled human experience, it is a rather curious juncture. We are, it seems, astounded by the sheer immensity of the computational power now in the hands of everyday people and, at the same time, a little nonplussed by the results. Already I sense a certain fatigue with the predictability and homogeneity of the pictures squirting out of text-to-image generators, like so many sausages from the sausage maker; and the current forays into autonomous, mobile, and even responsive architectures seem little more than excessive and boring IRL extensions of the internet’s robust surveillance tech. We already have laptops and smartphones tracking our every movement, mood, and desire; do we really need chairs that rush beneath our bottoms before we sit down, or canopies that cry when we feel blue? AI seems to have the potential to help us deal with many of the thorny crises of our epoch (mitigating climate change, repairing decaying infrastructure, planning sustainable cities), but helping us pump out feverishly psychedelic imagery with the most basic of prompts doesn’t address any of them. Perhaps text-to-image modeling is no more than another tech bro’s attempt to corner a market, and if so, it may be one we can do without. Of course, thinkers since the 19th century have been telling us that the trajectory of modernism will lead us inexorably into the maw of the singularity, whether it benefits us or not. We may as well enjoy the ride.